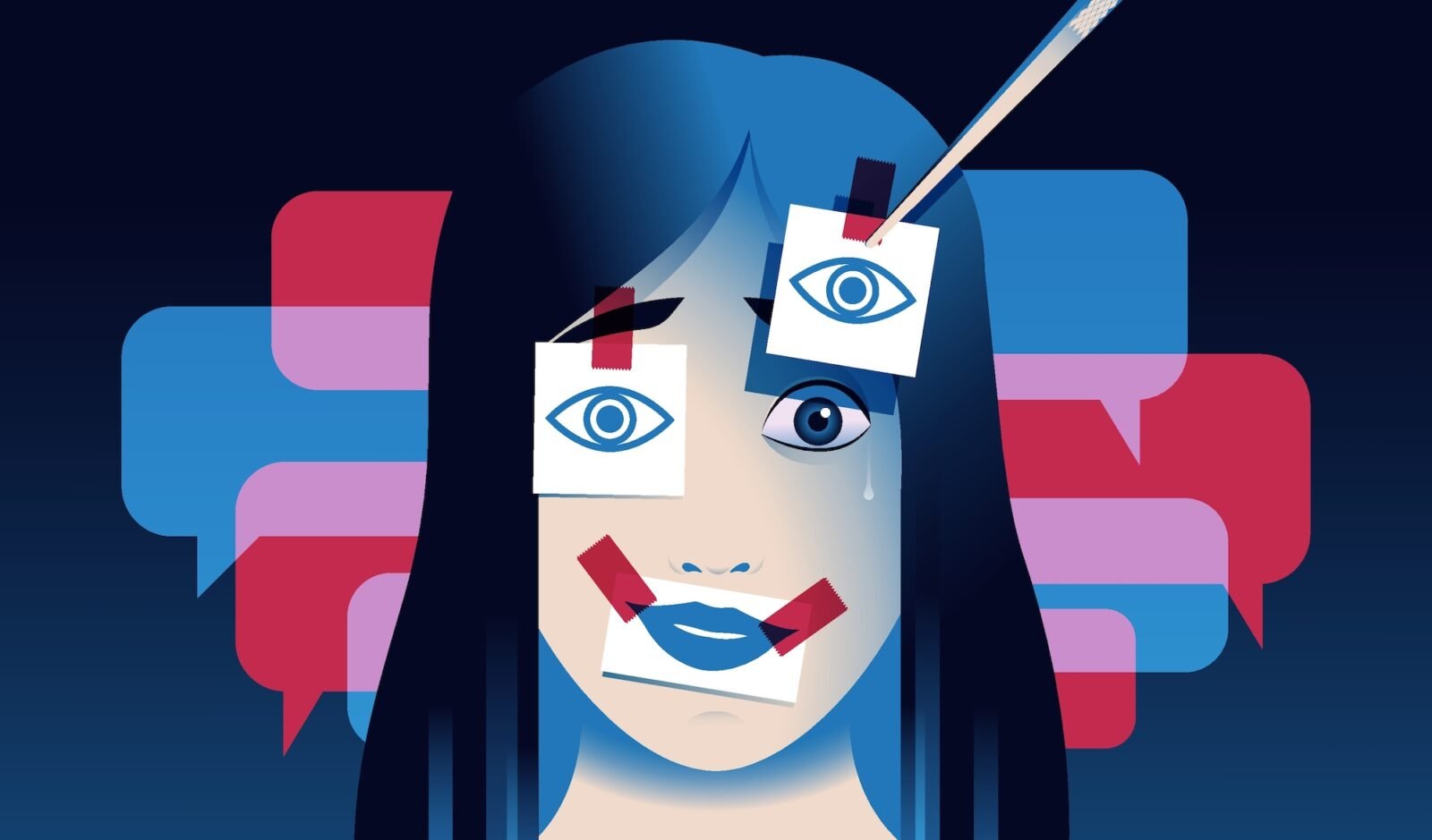

AI-generated "deepfake" material is flooding the internet, sometimes with dangerous results. Over the past year, AI has been used to create deceptive voice clones of a former US president and to spread fake, politically charged images depicting children in natural disasters. Meanwhile, nonconsensual AI-generated sexual images and videos are leaving a trail of trauma that affects everyone from high school students to Taylor Swift. Large tech companies such as Microsoft and Meta have made some efforts to identify instances of AI manipulation, but with only muted success. Now governments are stepping in to try to stem the tide with something they know quite a bit about: fines.

This week, lawmakers in Spain advanced new legislation that would fine companies up to $38.2 million, or between 2 percent and 7 percent of their worldwide annual turnover, if they fail to properly label AI-generated content. Within a few hours of that bill moving forward, lawmakers in South Dakota pushed ahead with their own legislation seeking to impose civil and criminal penalties on individuals and groups that share deepfakes intended to influence a political campaign. If it passes, South Dakota would become the 11th US state since 2019 to adopt legislation criminalizing deepfakes. All of these laws use the threat of potentially drained bank accounts as an enforcement lever.

According to Reuters, the Spanish bill follows guidelines established by the broader EU AI Act, which officially took effect last year. In particular, the bill is intended to add punitive teeth to provisions in the AI Act that impose stricter transparency requirements on certain AI tools considered "high risk." Deepfakes fall into that category. Failing to properly label AI-generated content would be considered a "serious offense."

"AI is a very powerful tool that can be used to improve our lives … or to spread misinformation," Spain's Minister of Digital Transformation, Oscar Lopez, said, according to Reuters.

In addition to the deepfake labeling rules, the legislation also bans the use of so-called "subliminal techniques" on certain groups classified as vulnerable. It would also place new limits on organizations that try to use biometric tools, such as facial recognition, to infer individuals' race or their political, religious, or sexual orientation. The bill must still be approved by Spain's lower house to become law. If it is, Spain would become the first EU country to enact legislation enforcing the AI Act's guidelines around deepfakes. It could also serve as a template for other nations to follow.

A handful of US states are taking the lead on deepfake enforcement

The newly proposed South Dakota bill, by contrast, is more narrowly tailored. It requires individuals or organizations to label deepfake content if it is political in nature and is created or shared within 90 days of an election. The version of the bill that advanced this week includes exemptions for newspapers, broadcasters, and radio stations, which had reportedly worried about potential legal liability for unintentionally sharing deepfake content. The bill also includes an exception for deepfakes that constitute "satire or parody," a potentially broad and hard-to-define carve-out.

Though watered down, South Dakota's bill is the newest addition to a growing patchwork of state laws aimed at curbing the spread of deepfakes. Texas, New Mexico, Indiana, and Oregon have all passed legislation specifically targeting deepfakes designed to influence political campaigns. Many of these efforts gained momentum in 2024, after an AI-generated "digital clone" of President Joe Biden's voice called voters in New Hampshire and urged them not to participate in the state's presidential primary. The Biden fakery was reportedly commissioned by Steve Kramer, a political consultant who at the time was working for the campaign of rival presidential candidate Dean Phillips. Phillips later condemned the deepfake and said he was not responsible for it. The Federal Communications Commission, meanwhile, hit Kramer with a $6 million fine for allegedly violating the Truth in Caller ID Act.

"I expect that the FCC's enforcement action will send a strong deterrent signal to anyone who might consider interfering with elections, whether through the use of illegal robocalls, artificial intelligence, or any other means," New Hampshire Attorney General John Formella said in a statement.

Four states (Florida, Louisiana, Washington, and Mississippi) have passed laws criminalizing the distribution of nonconsensual, AI-generated sexual content. This type of material, sometimes referred to as "revenge porn" when aimed at an individual, is the most common form of harmful deepfake content already spreading widely online. An independent researcher speaking with Wired in 2023 estimated that 244,625 deepfake pornography videos had been uploaded to the top 35 deepfake porn websites over the previous seven years. Nearly half of those videos were uploaded in the final nine months of that period.

The surge in uploads suggests that easier-to-use, more convincing generative AI tools, combined with a lack of meaningful safeguards, are making nonconsensual deepfakes more common. Lawmakers also have a personal stake in the issue. A study released last year by the American Sunlight Project (ASP) found that one in six women in Congress had been targeted by AI-generated sexual deepfakes.

Efforts to crack down on deepfakes at the federal level have moved more slowly, though that could be about to change. Earlier this month, First Lady Melania Trump voiced support for the "Take It Down Act," a controversial bill that would make it a federal crime to post nonconsensual intimate images (NCII) on social media platforms. If passed, the bill would also require platforms to remove NCII content, and any duplicates, within 48 hours of it being reported. The Senate has already passed the bill, and the House could vote on it in the coming weeks or months.

"It's heartbreaking to witness young teens, especially girls, grappling with the overwhelming challenges posed by malicious online content like deepfakes," Melania Trump said. "This toxic environment can be severely damaging."

The persistent problem with anti-deepfake laws

Although the goal of limiting deepfakes is commendable, critics worry that some of the laws and bills being pursued go too far. The Electronic Frontier Foundation (EFF) has warned for years that overly broad language in various state laws targeting political deepfakes could be abused by bad actors to criminalize ads that simply use dramatic music or compile authentic video clips in a way that is damaging to a candidate. The EFF also takes issue with the Take It Down Act and similar bills, which it says create an incentive to falsely label lawful speech as a nonconsensual deepfake in order to get it censored.

"Although protecting victims of these heinous privacy invasions is a legitimate goal, good intentions alone are not enough to make good policy," said Joe Mullin, a senior policy analyst at the EFF.

Early 2025 could mark a turning point in global efforts to combat AI-generated deepfakes. More European countries are likely to follow Spain's lead and propose new legislation criminalizing the creation or distribution of deepfakes. Although the details of these laws may vary, they are united in part by the underlying framework set out in the EU AI Act.

Meanwhile, the US is on the verge of passing its first federal law targeting deepfakes. In all of these cases, however, it remains to be seen how effectively lawmakers can wield these new legal tools. Deep-pocketed tech companies and political campaigns targeted by the laws are likely to mount legal challenges that strain government resources. The outcome of those potentially lengthy legal battles could determine whether anti-deepfake laws can actually achieve what they set out to do without stifling freedom of expression.
